Environment preparation

Note: the master needs at least 2 CPU cores.

| Node | IP | OS | CPU | Memory |
|---|---|---|---|---|
| master | 192.168.1.131 | CentOS 7.5 | 2 cores | 2 GB |
| node1 | 192.168.1.122 | CentOS 7.5 | 2 cores | 2 GB |
| node2 | 192.168.1.125 | CentOS 7.5 | 2 cores | 2 GB |
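You may want to confirm that each machine meets the minimums before you start. The sketch below assumes kubeadm's usual control-plane preflight thresholds of 2 CPUs and about 1700 MB of RAM. The function name `meets_minimum` is hypothetical. It takes the values as arguments, so you can try it anywhere.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the given CPU count and memory (MB)
# reach the assumed control-plane minimums (2 CPUs, 1700 MB).
meets_minimum() {
  local cpus=$1 mem_mb=$2
  local min_cpus=2 min_mem_mb=1700
  if [ "$cpus" -lt "$min_cpus" ]; then
    echo "need at least $min_cpus CPUs, found $cpus" >&2
    return 1
  fi
  if [ "$mem_mb" -lt "$min_mem_mb" ]; then
    echo "need at least ${min_mem_mb} MB RAM, found ${mem_mb} MB" >&2
    return 1
  fi
  return 0
}
# Usage on a real host (MemTotal in /proc/meminfo is in kB):
#   meets_minimum "$(nproc)" "$(awk '/^MemTotal:/ {print int($2/1024)}' /proc/meminfo)"
```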

Deploying K8S

Run the following commands on all three nodes

#Map the node hostnames to their IP addresses

cat >> /etc/hosts <<EOF

192.168.1.131 master

192.168.1.122 node01

192.168.1.125 node02

EOF
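`cat >> /etc/hosts` appends unconditionally, so running the block twice leaves duplicate entries. Below is a minimal idempotent alternative. `add_host_entry` is a hypothetical helper. It takes the target file as a parameter, so you can test it on a scratch copy first.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append "IP NAME" to a hosts-style file unless that
# exact line is already present, so re-running the setup adds no duplicates.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  local line="$ip $name"
  # -x: match the whole line, -F: treat the text literally (dots are not regex wildcards)
  if ! grep -qxF "$line" "$file" 2>/dev/null; then
    printf '%s\n' "$line" >> "$file"
  fi
}
# Usage (on every node):
#   add_host_entry /etc/hosts 192.168.1.131 master
#   add_host_entry /etc/hosts 192.168.1.122 node01
#   add_host_entry /etc/hosts 192.168.1.125 node02
```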

#Base environment

yum install -y wget expect vim net-tools ntp bash-completion ipvsadm ipset jq iptables conntrack sysstat libseccomp openssh-clients

systemctl stop firewalld && systemctl disable firewalld

setenforce 0

sed -i 's/^SELINUX=.*/SELINUX=disabled/' /etc/selinux/config

swapoff -a

sed -i '/ swap / s/^\(.*\)$/#\1/g' /etc/fstab

echo 'KUBELET_EXTRA_ARGS="--fail-swap-on=false"' > /etc/sysconfig/kubelet
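To confirm that the `sed` above really disables swap at boot, you can count uncommented fstab lines whose filesystem type (third field) is still `swap`. The helper `active_swap_entries` is hypothetical and takes the file path as an argument.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print how many uncommented fstab lines still mount swap.
active_swap_entries() {
  awk '$1 !~ /^#/ && $3 == "swap" { n++ } END { print n + 0 }' "$1"
}
# Usage on a real host:
#   active_swap_entries /etc/fstab   # expect 0 after the sed above
#   cat /proc/swaps                  # expect only the header line after swapoff -a
```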

#Set the time zone to Asia/Shanghai

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo 'Asia/Shanghai' > /etc/timezone

yum install -y ntp

ntpdate ntp1.aliyun.com

echo '*/1 * * * * /usr/sbin/ntpdate ntp1.aliyun.com &>/dev/null' >>/var/spool/cron/root

#Configure the hosts file

cat >> /etc/hosts << EOF

192.168.1.131 k8s-master

192.168.1.122 k8s-node01

192.168.1.125 k8s-node02

EOF

#Kernel tuning

cat << EOF | tee /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl = 15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.netfilter.nf_conntrack_max = 65536

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

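#Load the br_netfilter module (the net.bridge.* keys above exist only once it is loaded), and load it again at every boot

modprobe br_netfilter

echo br_netfilter > /etc/modules-load.d/br_netfilter.conf

sysctl --system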
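`sysctl --system` keeps going when a key fails, so a misspelled key is easy to miss. The sketch below compares each `key = value` line of a sysctl.d file with the live value under `/proc/sys`. A key with no matching file is reported as MISSING. `check_sysctl_conf` is a hypothetical helper. It assumes single-value keys and accepts an alternate root directory for testing.

```shell
#!/usr/bin/env bash
# Hypothetical helper: compare every "key = value" line of a sysctl.d file with
# the live value under /proc/sys (or another root, for testing).
# Assumes single-value keys; prints MISSING/MISMATCH lines and returns 1 on any problem.
check_sysctl_conf() {
  local conf=$1 root=${2:-/proc/sys} rc=0 key want path have
  while IFS='=' read -r key want || [ -n "$key" ]; do
    key=$(printf '%s' "$key" | tr -d '[:space:]')
    want=$(printf '%s' "$want" | tr -d '[:space:]')
    case $key in ''|'#'*|';'*) continue ;; esac   # skip blanks and comments
    path="$root/$(printf '%s' "$key" | tr . /)"     # net.ipv4.ip_forward -> net/ipv4/ip_forward
    if [ ! -e "$path" ]; then
      echo "MISSING $key"
      rc=1
      continue
    fi
    have=$(tr -d '[:space:]' < "$path")
    if [ "$have" != "$want" ]; then
      echo "MISMATCH $key want=$want have=$have"
      rc=1
    fi
  done < "$conf"
  return "$rc"
}
# Usage on a node:
#   check_sysctl_conf /etc/sysctl.d/k8s.conf
```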

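#Upgrade the kernel (run on every node). Docker and Kubernetes rely on newer kernel features such as IPVS, so a 4.0 or later kernel is generally recommended. CentOS 8 does not need the upgrade. This guide uses a 5.4 kernel.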

wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-5.4.187-1.el7.elrepo.x86_64.rpm

wget https://elrepo.org/linux/kernel/el7/x86_64/RPMS/kernel-lt-devel-5.4.187-1.el7.elrepo.x86_64.rpm

yum localinstall -y kernel-lt*

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

grubby --default-kernel

reboot
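After the reboot, you can check whether the node actually booted into the new kernel by comparing the `uname -r` version against 4.0. The helpers below are hypothetical and rely on GNU `sort -V` for version ordering.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if version $1 >= version $2, using GNU sort -V.
version_ge() {
  [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n1)" = "$2" ]
}
# Strip the release suffix from a `uname -r` string, e.g.
# 5.4.187-1.el7.elrepo.x86_64 -> 5.4.187, then compare against 4.0.
kernel_is_new_enough() {
  local release=$1
  version_ge "${release%%-*}" 4.0
}
# Usage on the node after the reboot:
#   kernel_is_new_enough "$(uname -r)" && echo "kernel OK"
```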

#Passwordless SSH login (run on the master only)

ssh-keygen -t rsa

for i in master node01 node02 ; do ssh-copy-id -i ~/.ssh/id_rsa.pub root@$i ; done
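To verify the key copy, try a non-interactive login to each host. With `-o BatchMode=yes`, ssh fails instead of prompting for a password, so any host that still needs a password shows up as a failure. `check_passwordless_ssh` and the `SSH_CMD` override are hypothetical. The override exists only so the loop can be dry-run with a stub.

```shell
#!/usr/bin/env bash
# Hypothetical helper: try a non-interactive SSH login to each host.
# BatchMode=yes makes ssh fail instead of prompting for a password, so a
# host that still needs a password is reported as FAIL.
# SSH_CMD can be overridden (e.g. with a stub function) for dry runs.
check_passwordless_ssh() {
  local ssh_cmd=${SSH_CMD:-ssh} host rc=0
  for host in "$@"; do
    if "$ssh_cmd" -o BatchMode=yes -o ConnectTimeout=5 "root@$host" true </dev/null >/dev/null 2>&1; then
      echo "OK   $host"
    else
      echo "FAIL $host"
      rc=1
    fi
  done
  return "$rc"
}
# Usage on the master:
#   check_passwordless_ssh master node01 node02
```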

#Install Docker

yum -y install yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum makecache fast

yum -y install docker-ce

systemctl start docker && systemctl enable docker

mkdir -p /etc/docker

sudo tee /etc/docker/daemon.json <<-'EOF'

{

"registry-mirrors": ["https://4vlknd55.mirror.aliyuncs.com"]

}

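EOF

#Restart Docker so that daemon.json takes effect

systemctl daemon-reload && systemctl restart docker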
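Docker reads `daemon.json` only when the daemon starts. One way to confirm that the mirror is active, and to see which cgroup driver kubeadm will warn about, is to read `docker info`. The helpers below are hypothetical and parse its text output from stdin. The exact layout of that output is an assumption and may differ between Docker versions.

```shell
#!/usr/bin/env bash
# Hypothetical helpers that read `docker info` output on stdin.
# Print the value after "Cgroup Driver:" (e.g. cgroupfs or systemd).
cgroup_driver() {
  awk -F': ' '/Cgroup Driver:/ { print $2; exit }'
}
# Print each URL listed under "Registry Mirrors:" (entries are indented lines
# that follow the heading; the first non-URL line ends the list).
registry_mirrors() {
  awk '/Registry Mirrors:/ { in_list = 1; next }
       in_list && /:\/\// { print $1; next }
       { in_list = 0 }'
}
# Usage after restarting Docker:
#   docker info 2>/dev/null | registry_mirrors
#   docker info 2>/dev/null | cgroup_driver
```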

#The default Kubernetes yum repository cannot be reached from mainland China without a proxy, so use the Aliyun mirror instead

cat > /etc/yum.repos.d/kubernetes.repo << EOF

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64

enabled=1

gpgcheck=0

repo_gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

yum install -y kubectl-1.20.2 kubelet-1.20.2 kubeadm-1.20.2

systemctl enable kubelet && systemctl start kubelet
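Since all three packages are pinned to 1.20.2 and the `kubeadm init` below passes `--kubernetes-version=v1.20.2`, it is worth confirming the installed versions match. `version_matches` is a hypothetical helper. It takes the first `vX.Y.Z` string from a tool's version output.

```shell
#!/usr/bin/env bash
# Hypothetical helper: extract the first vX.Y.Z from a tool's version output
# and compare it with the expected release.
version_matches() {
  local want=$1 output=$2 got
  got=$(printf '%s\n' "$output" | grep -oE 'v[0-9]+\.[0-9]+\.[0-9]+' | head -n1)
  [ "$got" = "$want" ]
}
# Usage on each node:
#   version_matches v1.20.2 "$(kubeadm version -o short)"
#   version_matches v1.20.2 "$(kubelet --version)"
#   version_matches v1.20.2 "$(kubectl version --client)"
```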

Run the following on the master only

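#Replace 192.168.1.131 with your master's IP address and leave everything else unchanged. The command takes a few minutes to finish. It prints [WARNING IsDockerSystemdCheck]: detected "cgroupfs" as the Docker cgroup driver. This is only a warning and can be ignored. (If the master has only 1 CPU core, the preflight check also fails because fewer than the required 2 cores are available.)

kubeadm init \
--apiserver-advertise-address=192.168.1.131 \
--image-repository=registry.cn-hangzhou.aliyuncs.com/k8sos \
--kubernetes-version=v1.20.2 \
--service-cidr=10.1.0.0/12 \
--pod-network-cidr=10.244.0.0/16

#When the command above finishes, it prints instructions similar to the commands below; run them as shown. It also prints a kubeadm join 192.168.1.131:6443 --token ... command. You run that command on the nodes to add them to the cluster, and we will need it shortly.

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config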
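The `--service-cidr` and `--pod-network-cidr` ranges must not overlap. Note also that 10.1.0.0/12 has host bits set. A /12 keeps only the first 12 bits, so the block it describes is 10.0.0.0/12 (10.0.0.0 to 10.15.255.255), which does not reach 10.244.0.0/16. The hypothetical bash helpers below do this arithmetic so you can check the two ranges before running `kubeadm init`.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for IPv4 CIDR arithmetic in plain bash (64-bit integers).
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}
int_to_ip() {
  local n=$1
  echo "$(( (n >> 24) & 255 )).$(( (n >> 16) & 255 )).$(( (n >> 8) & 255 )).$(( n & 255 ))"
}
# Netmask for a prefix length, e.g. 12 -> 0xFFF00000.
prefix_mask() {
  echo $(( (0xFFFFFFFF << (32 - $1)) & 0xFFFFFFFF ))
}
# Clear the host bits: 10.1.0.0/12 -> 10.0.0.0/12.
cidr_network() {
  local ip=${1%/*} len=${1#*/}
  echo "$(int_to_ip $(( $(ip_to_int "$ip") & $(prefix_mask "$len") )))/$len"
}
# Two blocks overlap iff they agree on the shorter of the two prefixes.
cidrs_overlap() {
  local ip1=${1%/*} len1=${1#*/} ip2=${2%/*} len2=${2#*/}
  local len=$(( len1 < len2 ? len1 : len2 ))
  local mask
  mask=$(prefix_mask "$len")
  [ $(( $(ip_to_int "$ip1") & mask )) -eq $(( $(ip_to_int "$ip2") & mask )) ]
}
# Usage with the values passed to kubeadm init:
#   cidr_network 10.1.0.0/12                                  # prints 10.0.0.0/12
#   cidrs_overlap 10.1.0.0/12 10.244.0.0/16 || echo "no overlap"
```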

Run the following on both nodes

#Do not copy the command below verbatim. Use the kubeadm join command that kubeadm init printed when it finished (the one mentioned above).

kubeadm join 192.168.1.131:6443 --token c1qboh.pg159xmk61z5rbeg \
--discovery-token-ca-cert-hash sha256:ff09b3a7b0989de094b73e811bf378d8ab1cf3c1e413e3753ebd5de826075931
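Bootstrap tokens printed by `kubeadm init` expire (after 24 hours by default). If the token has lapsed, running `kubeadm token create --print-join-command` on the master prints a fresh join command. If you saved the init output to a file, the hypothetical helper below pulls the join command back out of it. It assumes the command is the last `kubeadm join` in the log, with continuation lines ending in a backslash.

```shell
#!/usr/bin/env bash
# Hypothetical helper: given a saved copy of the kubeadm init output, print the
# last "kubeadm join" command together with its backslash-continued lines
# (the last one is normally the worker join; an earlier one may be a
# control-plane join).
extract_join_cmd() {
  awk '
    /^[[:space:]]*kubeadm join/ { cmd = ""; grab = 1 }
    grab {
      line = $0
      sub(/^[[:space:]]+/, "", line)            # drop indentation
      cmd = (cmd == "") ? line : cmd "\n" line
      if (line !~ /\\$/) grab = 0               # no trailing backslash: command ends here
    }
    END { if (cmd != "") print cmd }
  ' "$1"
}
# Usage on the master:
#   kubeadm init <same flags as above> | tee kubeadm-init.log
#   extract_join_cmd kubeadm-init.log
```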

Run the following on the master

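#Configure the Calico network

wget https://docs.projectcalico.org/manifests/calico.yaml

kubectl apply -f calico.yaml

#It may take about a minute for Calico to start and finish initializing. Wait before running the next command

kubectl get nodes

#It may take a few minutes for every node's STATUS to become Ready. Wait before running the next command

kubectl get pod -n kube-system

#It may take a few minutes for every pod to become ready (READY 1/1). Wait before continuing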
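Instead of re-running the `kubectl get` commands by hand, you can poll until everything is ready. `kubectl wait --for=condition=Ready nodes --all --timeout=300s` is a built-in option for nodes. The hypothetical helpers below take a text-based approach instead. They read `--no-headers` output on stdin and assume the default column order (nodes: NAME STATUS ...; pods: NAME READY STATUS ...).

```shell
#!/usr/bin/env bash
# Hypothetical helpers that read `kubectl get ... --no-headers` output on stdin.
# Nodes: column 2 is STATUS; every node must report exactly "Ready".
# Empty input counts as not ready (e.g. kubectl itself failed).
all_nodes_ready() {
  awk '$2 != "Ready" { bad = 1 } END { exit (bad || NR == 0) }'
}
# Pods: column 2 is READY (e.g. 1/1), column 3 is STATUS; every pod must be
# Running with all of its containers ready.
all_pods_ready() {
  awk '{ split($2, r, "/"); if (r[1] != r[2] || $3 != "Running") bad = 1 }
       END { exit (bad || NR == 0) }'
}
# Usage on the master, polling every 10 seconds:
#   until kubectl get nodes --no-headers | all_nodes_ready; do sleep 10; done
#   until kubectl get pod -n kube-system --no-headers | all_pods_ready; do sleep 10; done
```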

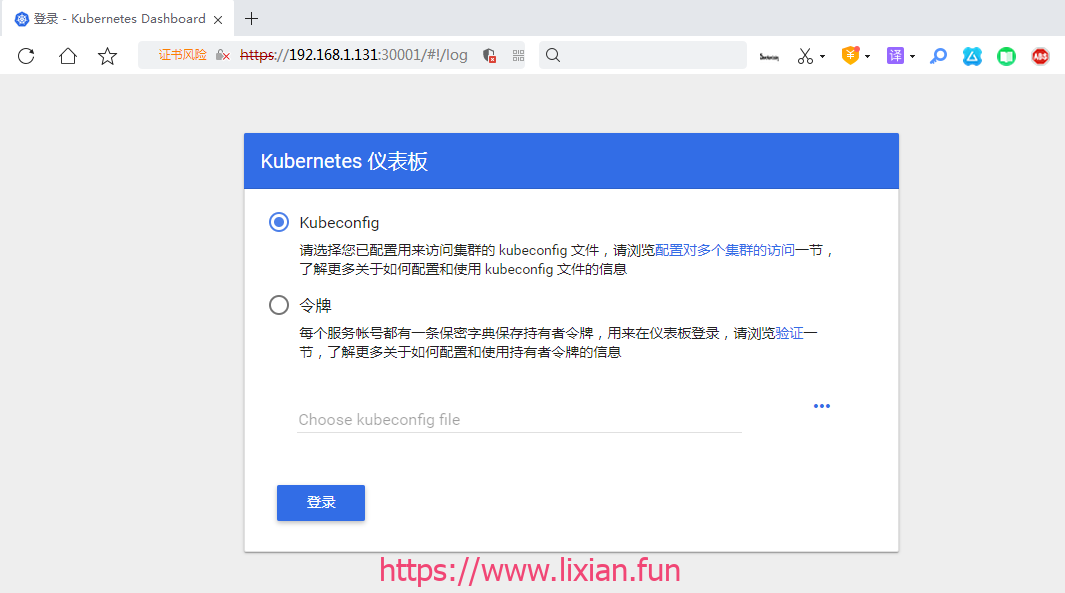

#Deploy Rancher on one of the nodes

docker run --privileged -d --restart=always --name rancher -p 80:80 -p 443:443 rancher/rancher:stable

#Set up the Kubernetes dashboard (web UI)

wget https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml

vim kubernetes-dashboard.yaml

#Change the lines shown below (the numbers are line numbers in the file). Indent with spaces only, never tabs, and keep the indentation exactly as in the original file, not one space more or less

......
111       - name: kubernetes-dashboard
112         image: lizhenliang/kubernetes-dashboard-amd64:v1.10.1 # replace this line
......
157 spec:
158   type: NodePort # add this line
159   ports:
160     - port: 443
161       targetPort: 8443
162       nodePort: 30001 # add this line
163   selector:
164     k8s-app: kubernetes-dashboard
......

kubectl apply -f kubernetes-dashboard.yaml

#You can now open https://192.168.1.131:30001 (the dashboard may take a moment to come up). Note that it is https
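YAML indentation must use spaces, and a stray tab from the manual edit is easy to miss in vim. The hypothetical helper below reports every line containing a tab so you can fix it before `kubectl apply`.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report any line of a YAML file that contains a tab
# character and return 1 if one is found (0 means the file is tab-free).
yaml_has_no_tabs() {
  awk '/\t/ { printf "line %d contains a tab\n", NR; found = 1 }
       END { exit found + 0 }' "$1"
}
# Usage before applying the edited manifest:
#   yaml_has_no_tabs kubernetes-dashboard.yaml && grep -n 'NodePort\|nodePort: 30001' kubernetes-dashboard.yaml
```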

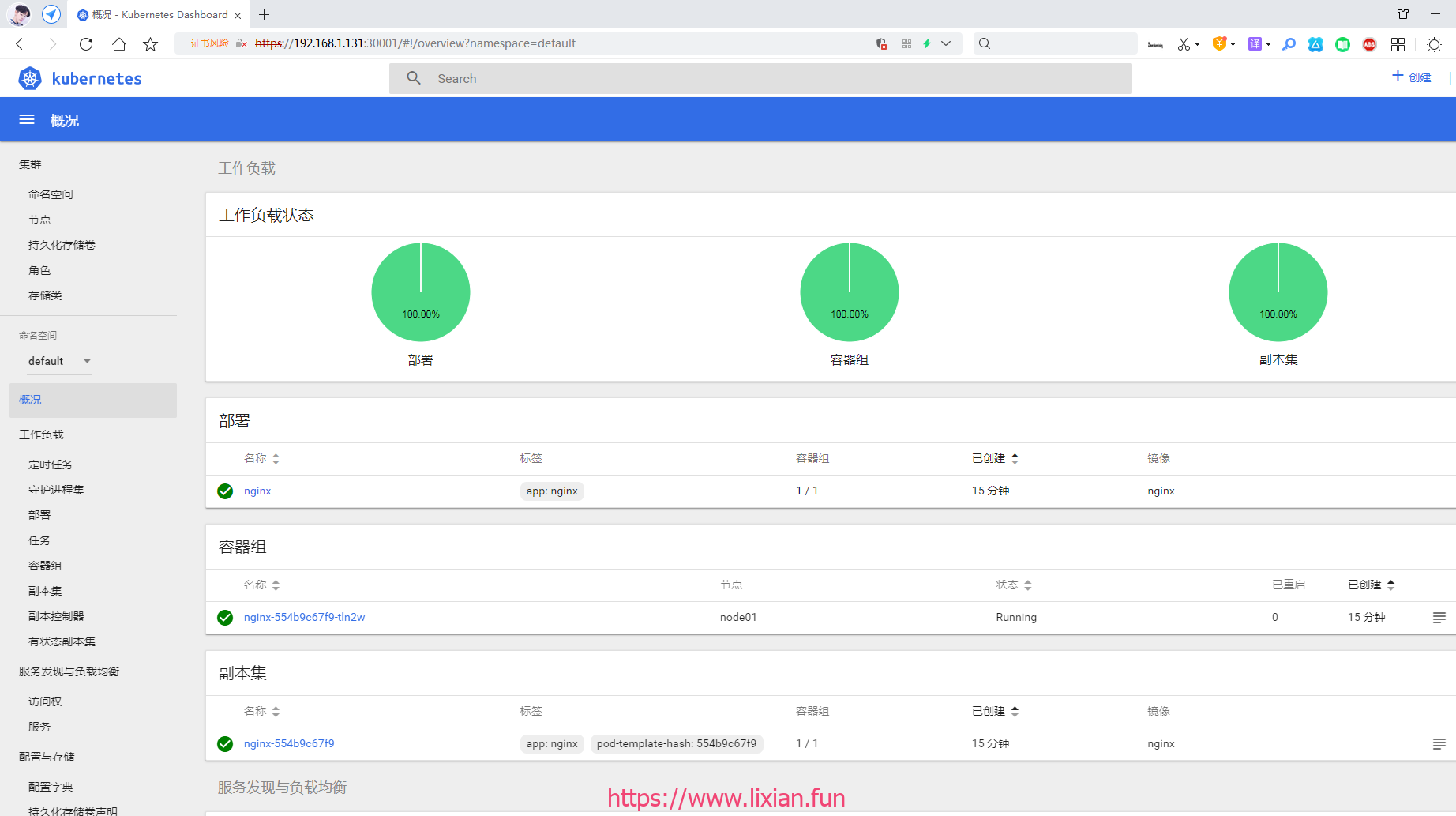

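Opening the K8S dashboard looks like this. The browser warns that the connection is not secure; click Advanced -> Accept the Risk and Continue: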

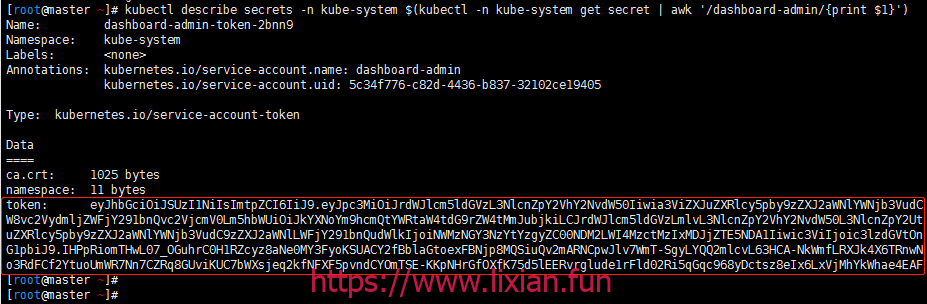

#Create an admin service account

kubectl create serviceaccount dashboard-admin -n kube-system

#Bind the account to the cluster-admin role

kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin

#The following command prints the token for this account. Open https://192.168.1.131:30001, choose Token, and paste the token to log in to the Kubernetes dashboard

kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')
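The `describe` output contains several fields, but only the token is needed. An alternative is `kubectl -n kube-system get secret <name> -o jsonpath='{.data.token}' | base64 -d`. The hypothetical helper below instead filters the `describe` output above down to the token value. It assumes the field is printed as `token:` followed by the value on the same line.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print only the value of the "token:" field from
# `kubectl describe secret` output read on stdin.
extract_token() {
  awk '$1 == "token:" { print $2; exit }'
}
# Usage on the master:
#   kubectl describe secrets -n kube-system \
#     $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}') | extract_token
```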